RabbitFarm

2020-08-27

All Combinations Equal to a Sum in Perl and Prolog

A problem that comes up surprisingly often is finding all the lists of numbers which sum to some given number S.

For example, let's look at Perl Weekly Challenge 075:

You are given a set of coins @C, assuming you have infinite amount of each coin in the set.

Write a script to find how many ways you make sum $S using the coins from the set @C.

Example:

Input:

@C = (1, 2, 4)

$S = 6

Output: 6

There are 6 possible ways to make sum 6.

a) (1, 1, 1, 1, 1, 1)

b) (1, 1, 1, 1, 2)

c) (1, 1, 2, 2)

d) (1, 1, 4)

e) (2, 2, 2)

f) (2, 4)
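Since the challenge asks only for the number of ways, the expected count can be checked independently of any enumeration. The following is a minimal sketch in Python (not the Perl or Prolog used in this post) of the classic coin-change dynamic programming technique. The function name `count_ways` is made up for illustration, and the coins are assumed to be positive integers.

```python
def count_ways(coins, target):
    """Count the combinations of coins (order ignored) that sum to target."""
    # ways[a] holds the number of combinations found so far that sum to a.
    ways = [1] + [0] * target
    # Handling one coin at a time means each combination is counted once,
    # regardless of the order in which its coins could be arranged.
    for coin in coins:
        for amount in range(coin, target + 1):
            ways[amount] += ways[amount - coin]
    return ways[target]


print(count_ways([1, 2, 4], 6))  # prints 6
```

This only counts the combinations; it does not list them, which is where the enumeration approach comes in.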

Such a problem is a nice application for a logic programming approach!

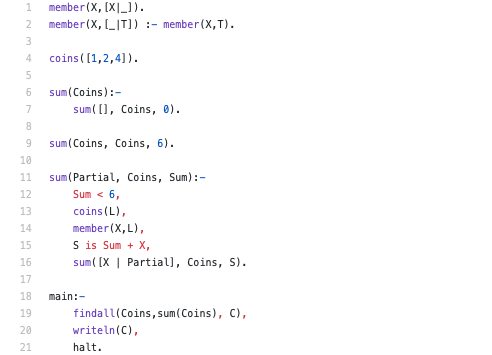

Here is a first attempt with a pure Prolog solution (with SWI-Prolog).

Sample Run

$ swipl -s prolog/ch-1.p -g main

[[1,1,1,1,1,1],[2,1,1,1,1],[1,2,1,1,1],[1,1,2,1,1],[2,2,1,1],[4,1,1],[1,1,1,2,1],[2,1,2,1],[1,2,2,1],[1,4,1],[1,1,1,1,2],[2,1,1,2],[1,2,1,2],[1,1,2,2],[2,2,2],[4,2],[1,1,4],[2,4]]
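The sample run lists ordered sequences, so the same coins arranged differently appear as separate solutions. As a rough illustration of that behaviour, here is a sketch in Python rather than Prolog. It is not the code from this post, the name `ordered_sums` is hypothetical, and coins are assumed to be positive integers.

```python
def ordered_sums(coins, target):
    """Yield every ordered list of coins whose values add up to target."""
    if target == 0:
        # An empty list is the one way to make a sum of zero.
        yield []
        return
    for coin in coins:
        if coin <= target:
            # Pick this coin first, then complete the remainder recursively.
            for rest in ordered_sums(coins, target - coin):
                yield [coin] + rest


solutions = list(ordered_sums([1, 2, 4], 6))
print(len(solutions))  # prints 18
```

The count of 18 matches the number of lists in the sample run above, although the order in which they are generated may differ from Prolog's.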

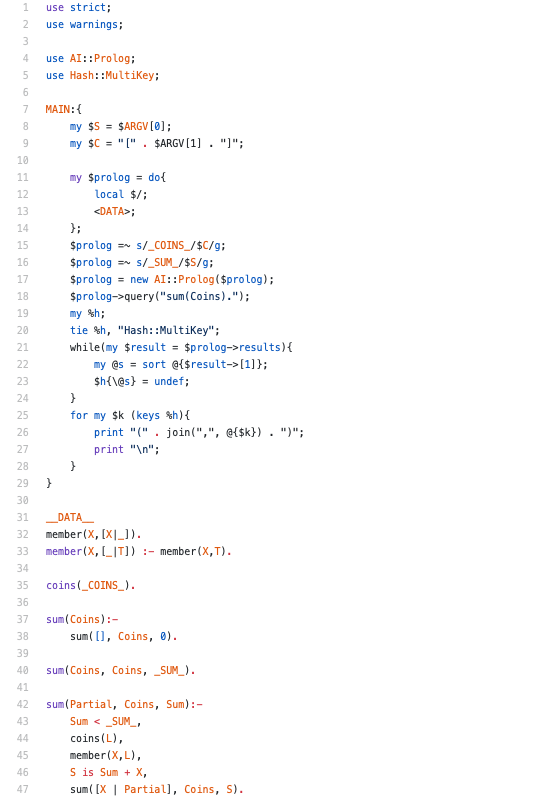

The list contains duplicates (the same coins in a different order) that need to be cleaned up, but since this is a Perl-centric challenge let's do that in Perl. While we're at it, we'll also have Perl handle the input and output.

Sample Run

$ perl perl/ch-1.pl 6 "1,2,4"

(1,1,1,1,2)

(1,1,4)

(1,1,1,1,1,1)

(1,1,2,2)

(2,2,2)

(2,4)

The deduplication is handled by storing the resulting lists as hash keys. In order to use array references as hash keys we need to use Hash::MultiKey. The target sum and coin value list are set in the Prolog code via string substitution. Of course these could have been set as parameters and passed in the normal way but this seemed slightly more fun!
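The deduplication idea can be sketched in a few lines. This is a Python stand-in, not the Perl from this post: tuples play the role that Hash::MultiKey plays for array references, and sorting each list before using it as a key is an assumption about how the order-insensitive comparison is done. The name `dedupe` is hypothetical, and the input is the list printed by the Prolog sample run.

```python
# The eighteen ordered lists from the Prolog sample run.
raw = [
    [1, 1, 1, 1, 1, 1], [2, 1, 1, 1, 1], [1, 2, 1, 1, 1], [1, 1, 2, 1, 1],
    [2, 2, 1, 1], [4, 1, 1], [1, 1, 1, 2, 1], [2, 1, 2, 1], [1, 2, 2, 1],
    [1, 4, 1], [1, 1, 1, 1, 2], [2, 1, 1, 2], [1, 2, 1, 2], [1, 1, 2, 2],
    [2, 2, 2], [4, 2], [1, 1, 4], [2, 4],
]


def dedupe(solutions):
    """Collapse lists that contain the same coins in a different order."""
    seen = {}
    for solution in solutions:
        # A sorted tuple is hashable and identical for every reordering.
        seen[tuple(sorted(solution))] = True
    return sorted(seen)


unique = dedupe(raw)
for combo in unique:
    print(combo)
```

This leaves the six combinations from the challenge example, here printed in sorted order rather than in hash order.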

posted at: 21:37 by: Adam Russell | path: /perl | permanent link to this entry

Artificial Recognition

Let's refer to almost all of the current uses of Neural Networks as Artificial Recognition.

The present wave of enthusiasm for Artificial Intelligence has been due to the impressive success of Artificial Neural Networks. We are in the midst of a Cambrian Explosion of Deep Learning techniques which offer demonstrably powerful solutions to an impressive range of problems: self-driving cars, product recommendations, image recognition, clinical diagnostics, and on and on.

Should we really be calling this intelligence though? For nearly 80 years what we call Artificial Intelligence has changed and undergone categorical revisions. We now have ideas such as Strong AI, Weak AI, Semantic AI, Symbolic AI, Statistical AI, and so on. There is, naturally, overlap in the things these terms refer to. For example, if I use a modern framework such as Google's TensorFlow to do a binary classification of images into two classes, "contains stop sign" and "does not contain stop sign", we are using statistical methods to perform a Weak AI task.

Is recognizing stop signs in that example based on anything we could realistically call "intelligence"? Of course not! Not directly anyway. We could argue that the intelligence is not artificial at all! Instead it is a reflection of the real natural intelligence of the humans who have correctly arranged and labelled the images which the predictive model has been trained on. The training process for this model reads in the images and, in simple terms, stores a set of numbers so that for any image provided from that training set the correct label ("stop sign"/"no stop sign") is calculated. We then hope that this training set of images is properly representative of reality. In this way a new image that the model has never seen before receives the proper classification. If we have a massive training set that has every conceivable stop sign configuration (stop signs lying at a weird angle because they got hit by a car, stop signs covered in graffiti, stop signs rusted in half, etc.) then the model will appear to actually be intelligent! If we have a training set which is not representative of the real world the model will fail to give a correct result in many fairly common scenarios.

You can see why this would be referred to as Weak AI. There is a complete failure to generalize: the power of the predictive model is entirely dependent on how it has been trained, and even at its most powerful it could only be effective at recognizing stop signs.

We can now understand what a General AI (Strong AI) would be. Even a young child would be able to see one or two stop signs and be able to recognize one whether or not it had been hit by a truck or covered in graffiti. That is the power to generalize.

I would argue that this Weak AI, especially as currently practiced, is so far from any rational concept of intelligence that we should drop the term altogether! After all, we are not really accomplishing a task based on intelligence but a fairly convincing simulacrum of recognition. The term pattern recognition is common and completely appropriate. Whether we are recognizing stop signs, detecting medical abnormalities, uncovering insurance fraud, or predicting what movies a person might like, we are fitting the input to a pre-defined filter of known patterns.

Broadly describing this as merely pattern recognition is perhaps a bit too dismissive. After all, this is far more than just fitting square pegs in square holes. Inarguably there is something so impressive, so outwardly reflective of our own recognition abilities, that it deserves distinct categorization.

Instead of using terms such as intelligence let's call this Artificial Recognition. This term is seldom used for other purposes and for nothing similar. See [1], [2], and [3] for mostly orthogonal uses of the term as it relates to computer and biological vision. It appears the term is not widely adopted in those fields and it would be appropriate to claim it!

When we try to categorize things as being intelligent we create a false impression in the general public which then has to be explained away. Why create the confusion and potential for disappointment in the first place? The use of the phrase Artificial Recognition avoids an implicit overpromising of capabilities.

References

[1] http://www.cnbc.cmu.edu/~tai/readings/nature/sinha.pdf

[2] https://www.nonteek.com/en/comparing-human-and-artificial-image-recognition-some-considerations/

[3] https://apps.dtic.mil/dtic/tr/fulltext/u2/a454724.pdf

posted at: 16:33 by: Adam Russell | path: /ai | permanent link to this entry